ACM Multimedia 2019

ConfLab: Meet the Chairs!

The event has concluded. The dataset is available below.

How it Works

What data are you contributing?

We will be collecting the following data as part of this event:

Acceleration and proximity

Our newly designed MINGLE Midge wearable device records acceleration and proximity during your interactions. It is worn around the neck like a conference badge. Acceleration readings can be used to infer some of your actions, such as walking and gesturing.

Video

Overhead cameras will be mounted to capture the interactions. The videos will be used to annotate behavior and to detect social actions, such as speaking, and conversational groups.

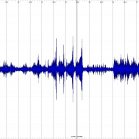

Low-frequency audio

The MINGLE Midge will also record low-frequency audio. This low frequency is enough to recognize whether you are speaking, but not enough to understand the content of your speech, giving us valuable information without compromising your privacy.
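To illustrate why low-frequency audio can still reveal whether someone is speaking, here is a minimal sketch of an energy-based detector. It is not the method used in ConfLab. The sampling rate, frame length, threshold, and function names are all assumptions chosen for illustration, and the input is a synthetic tone rather than real speech.

```python
import math


def frame_rms(samples, frame_len):
    """Return the RMS energy of each non-overlapping frame of `frame_len` samples."""
    last_start = len(samples) - frame_len
    frames = [samples[i:i + frame_len] for i in range(0, last_start + 1, frame_len)]
    return [math.sqrt(sum(x * x for x in frame) / frame_len) for frame in frames]


def detect_speaking(samples, frame_len, threshold):
    """Flag a frame as 'speaking' when its RMS energy exceeds `threshold`."""
    return [rms > threshold for rms in frame_rms(samples, frame_len)]


# Synthetic signal: one second of silence followed by one second of a 150 Hz tone.
rate = 1000  # hypothetical low sampling rate in Hz, not the device's actual rate
silence = [0.0] * rate
tone = [0.5 * math.sin(2 * math.pi * 150 * n / rate) for n in range(rate)]
signal = silence + tone

# Hypothetical frame length (0.25 s) and threshold, chosen only for this example.
flags = detect_speaking(signal, frame_len=250, threshold=0.1)
print(flags)
```

The detector only looks at how loud each frame is, which is the kind of information that survives at a low frequency, while the detail needed to make out words does not.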

Survey measures

Your research interests and level of experience within the MM community will be linked to the data above via a numerical identifier.
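As a rough sketch of what linking via a numerical identifier could look like, the example below joins survey answers to sensor records using only a participant number. The field names, identifiers, and values are hypothetical and do not reflect the actual ConfLab data format.

```python
# Hypothetical survey answers, keyed by numerical participant identifier.
survey = {
    17: {"research_interest": "multimedia retrieval", "experience": "first-time attendee"},
    42: {"research_interest": "affective computing", "experience": "regular attendee"},
}

# Hypothetical sensor records, each tagged with the same numerical identifier.
sensor_records = [
    {"participant_id": 17, "modality": "acceleration"},
    {"participant_id": 42, "modality": "low-frequency audio"},
    {"participant_id": 17, "modality": "proximity"},
]


def link_records(records, survey_answers):
    """Attach survey answers to each record by matching on the numerical identifier."""
    linked = []
    for record in records:
        answers = survey_answers.get(record["participant_id"], {})
        linked.append({**record, **answers})
    return linked


linked = link_records(sensor_records, survey)
for row in linked:
    print(row)
```

In this sketch no name or other direct identifier appears in either table. The number is the only key that ties them together.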

Hayley Hung

Associate Professor: Socially Perceptive Computing

Ekin Gedik

Postdoctoral researcher: Multi-modal Social Experience Modelling

Bernd Dudzik

PhD Student: Memory as Context for Induced Affect from Multimedia

Stephanie Tan

PhD Student: Multi-modal Head Pose Estimation & Conversation Detection

Chirag Raman

PhD Student: Multi-modal Group Evolution Modelling

Jose Vargas

PhD Student: Multi-modal Conversational Event Detection

Organizers

ConfLab is an initiative of the Socially Perceptive Computing Lab, Delft University of Technology.

We have over 10 years of experience in developing automated behavior analysis tools and collecting large-scale social behavior data in the wild.

We are partially supported by the Dutch Research Agency (NWO) MINGLE project and the organizers of ACM Multimedia 2019.